Welcome to my blog! I’m Jan Wilhelm T. Sy, a Computer Science student from Bicol University.

Over the past few weeks, I’ve had the incredible opportunity to undertake an On-the-Job Training (OJT) at Iraya Energies.

This experience will be a pivotal part of my academic journey, allowing me to apply and expand my knowledge in real-world settings.

Chatbot Testing & Optimization

Welcome to my blog! This week marks the fifth week of my On-the-Job Training (OJT) program, which focuses on developing a chatbot. From July 8 to July 12, 2024, I worked on improving, testing, and optimizing the chatbot.

This week continued my OJT program's focus on chatbot development. My main goal for the week was to improve the chatbot and its features, and to test and optimize it.

ACTIVITY PERFORMED

In line with the mentorship plan, this week's activities revolved heavily around chatbot improvement, testing, and optimization.

Chatbot Implementation and Enhancements

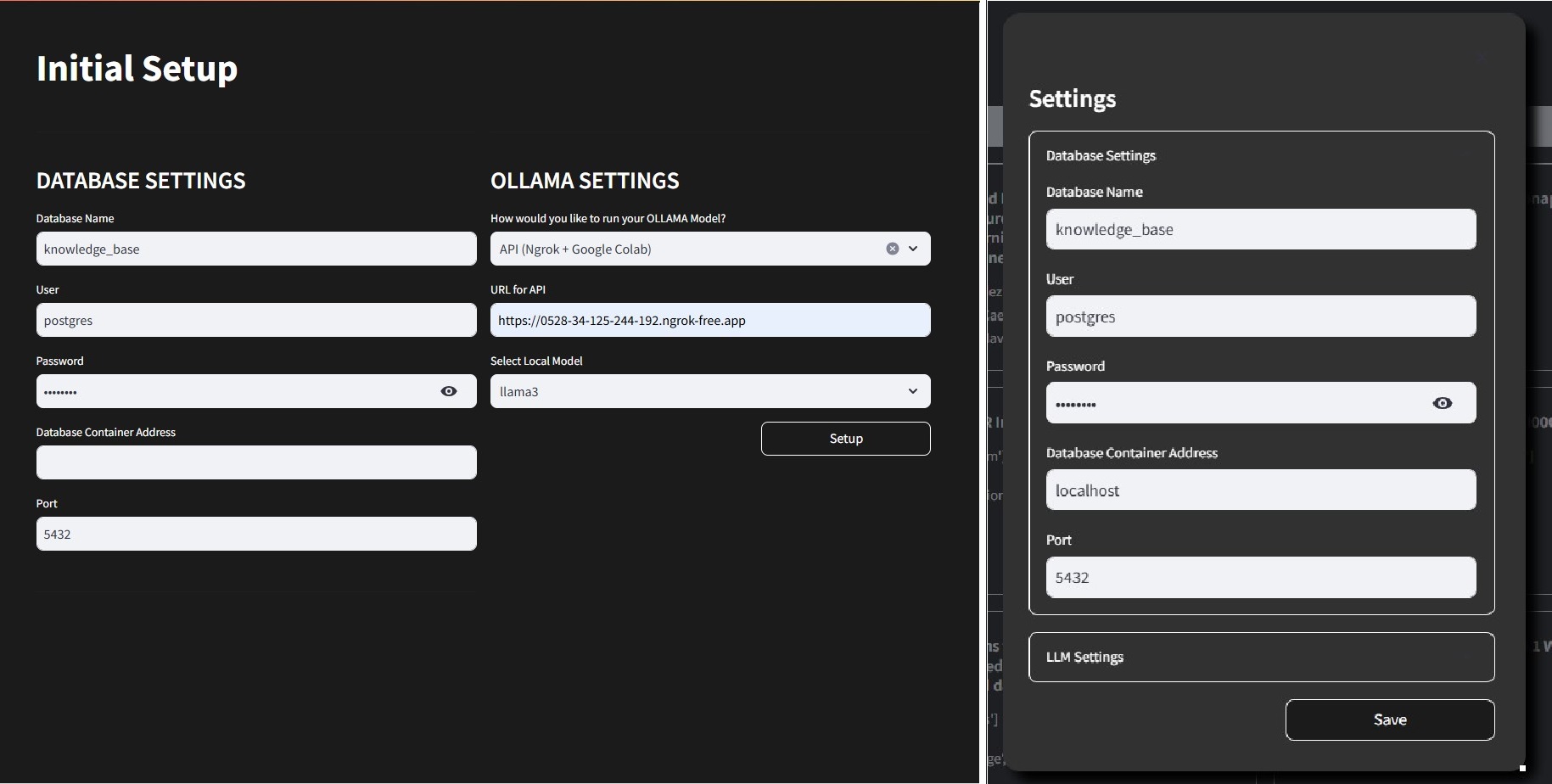

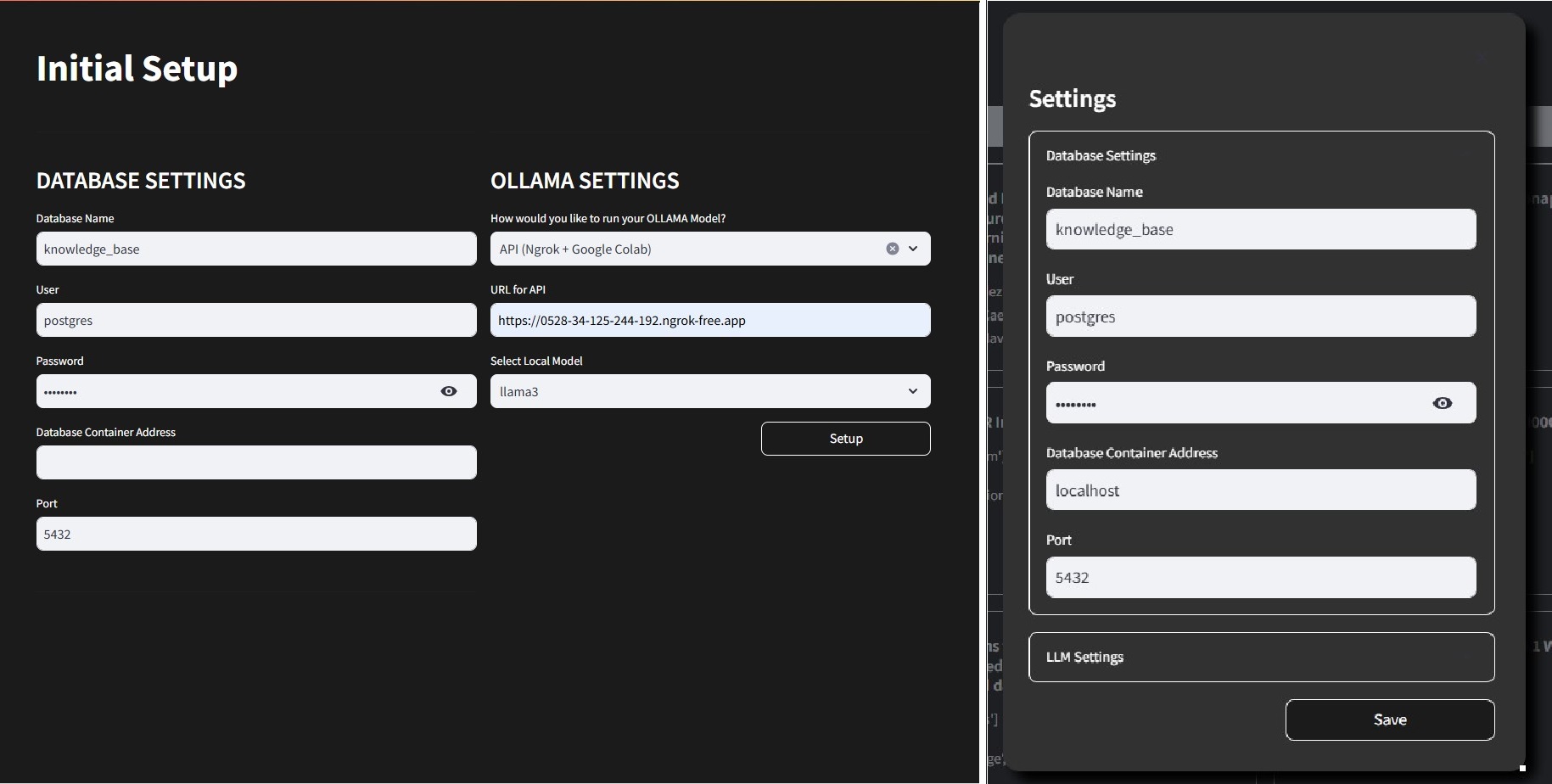

I changed how we load the LLM. Previously, we loaded the LLM through HuggingFace, either locally or through an API. Now, I use Ollama to load the model, which can run either locally or through an API hosted on Google Colab and exposed with Ngrok. With this change, I also switched the LLM from Phi-3-mini to Llama3-8B.
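For readers new to Ollama, here is a minimal sketch of calling a Llama3-8B model through Ollama's REST `/api/generate` endpoint with only Python's standard library. It assumes Ollama's default local address (`http://localhost:11434`) and the `llama3:8b` model tag; when the model runs on Google Colab behind Ngrok, `base_url` would be the Ngrok forwarding URL instead. The helper names `build_generate_request` and `generate` are hypothetical, and this is not necessarily how the project itself wires up the model.

```python
import json
import urllib.request

# Assumption: Ollama's default local address. For a Colab + Ngrok setup,
# pass the Ngrok forwarding URL as base_url instead.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(prompt: str, model: str = "llama3:8b",
                           base_url: str = OLLAMA_URL,
                           temperature: float = 0.2) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for one JSON object instead of a token stream
        "options": {"temperature": temperature},
    }
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, **kwargs) -> str:
    """Send the request and return the generated text (needs a running Ollama server)."""
    req = build_generate_request(prompt, **kwargs)
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

Switching between a local model and the Colab-hosted one then only means changing `base_url`, which is one reason the Ollama approach keeps both options open.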

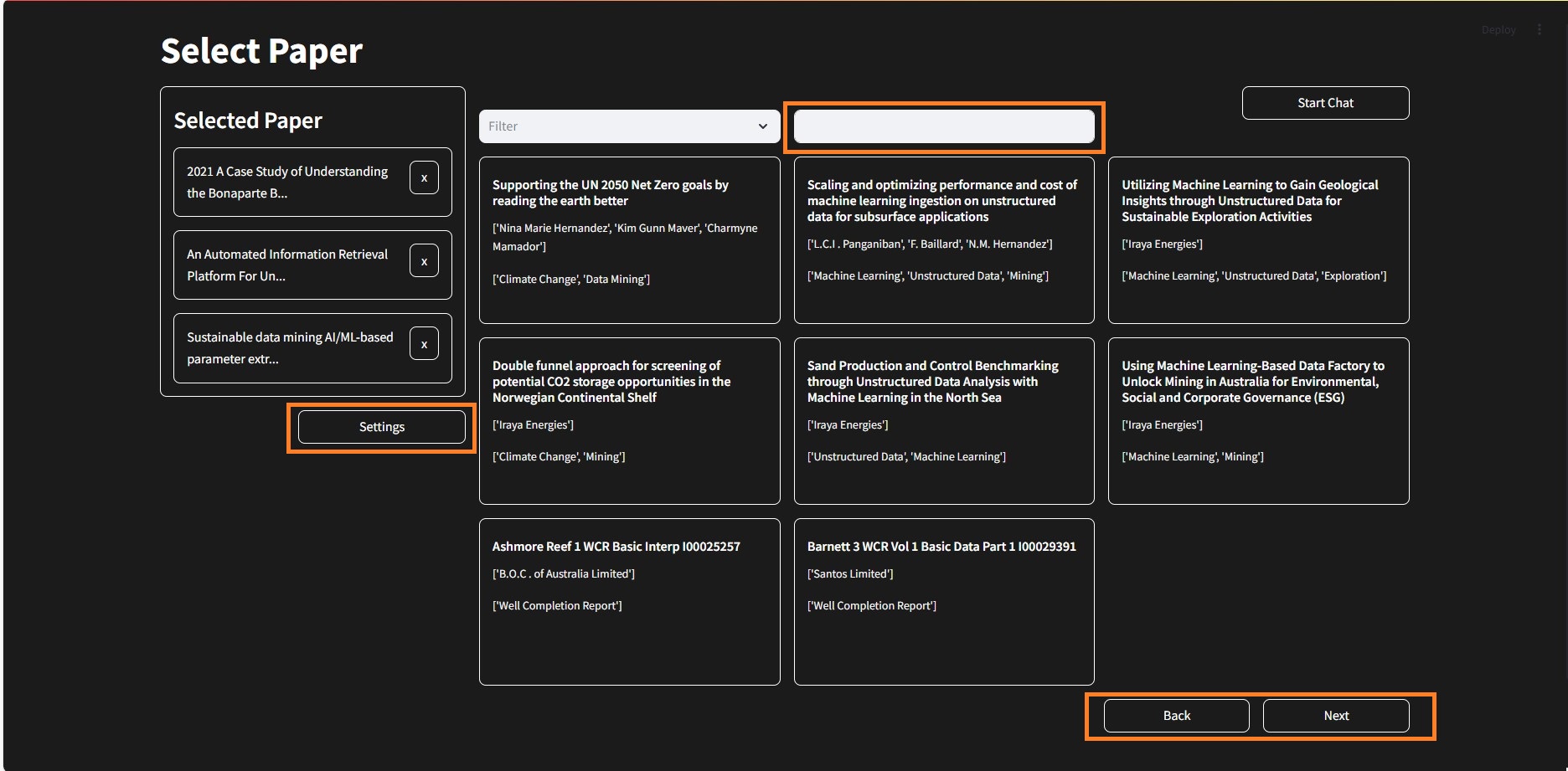

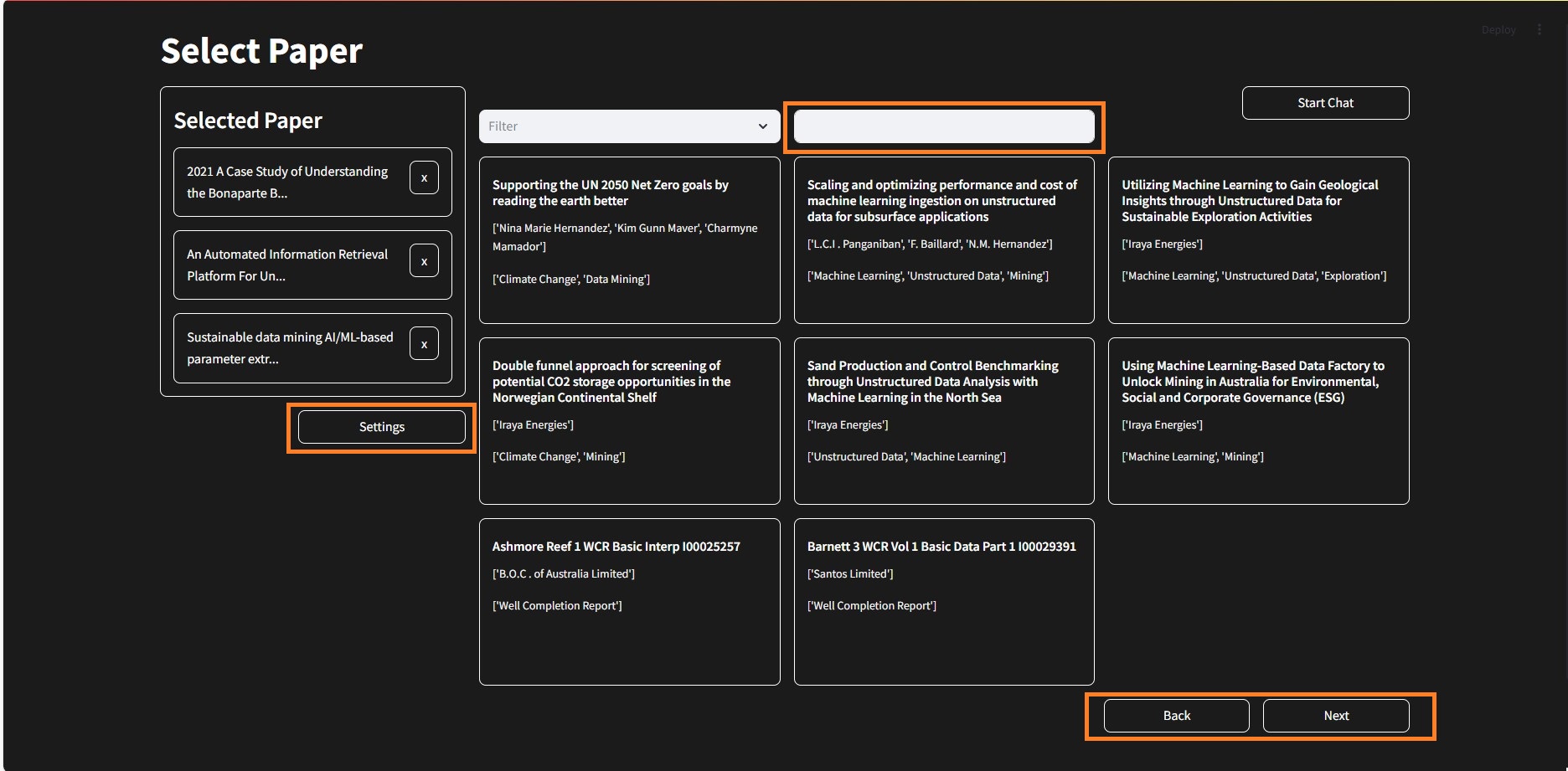

As this was my fifth week of OJT, I focused on improving our chatbot implementation and its user experience. I refined the chatbot system to enhance both its functionality and its user interactions. I implemented new features such as a search bar on the Chatbot Document Select Page to make information easier to find. I also added next and previous page buttons to streamline document navigation, so users can browse content more intuitively.
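The search bar and the next/previous buttons boil down to filtering a list and clamping a page index. The sketch below shows that logic in Python under a few assumptions: a case-insensitive substring match, a page size of 10, and hypothetical names (`search_documents`, `Pager`) that are not the project's actual components.

```python
from dataclasses import dataclass
from math import ceil


def search_documents(titles: list[str], query: str) -> list[str]:
    """Case-insensitive substring search; an empty query returns every title."""
    q = query.strip().lower()
    if not q:
        return list(titles)
    return [t for t in titles if q in t.lower()]


@dataclass
class Pager:
    items: list[str]
    page_size: int = 10  # hypothetical page size
    page: int = 0        # zero-based index of the current page

    @property
    def page_count(self) -> int:
        # An empty result still shows one (empty) page
        return max(1, ceil(len(self.items) / self.page_size))

    def current(self) -> list[str]:
        start = self.page * self.page_size
        return self.items[start:start + self.page_size]

    def next_page(self) -> None:
        # Clamp so "Next" on the last page stays on the last page
        self.page = min(self.page + 1, self.page_count - 1)

    def previous_page(self) -> None:
        # Clamp so "Previous" on the first page stays on the first page
        self.page = max(self.page - 1, 0)
```

When the user types a new search, creating a fresh `Pager` (or resetting `page` to 0) avoids landing on a page that no longer exists for the smaller result set.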

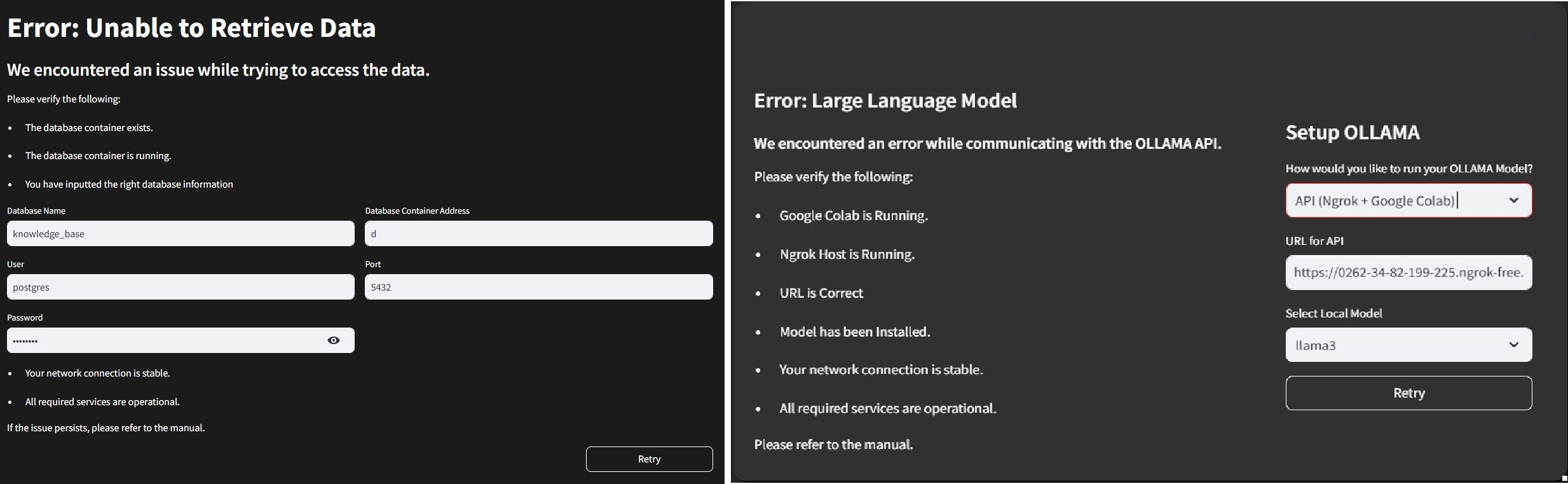

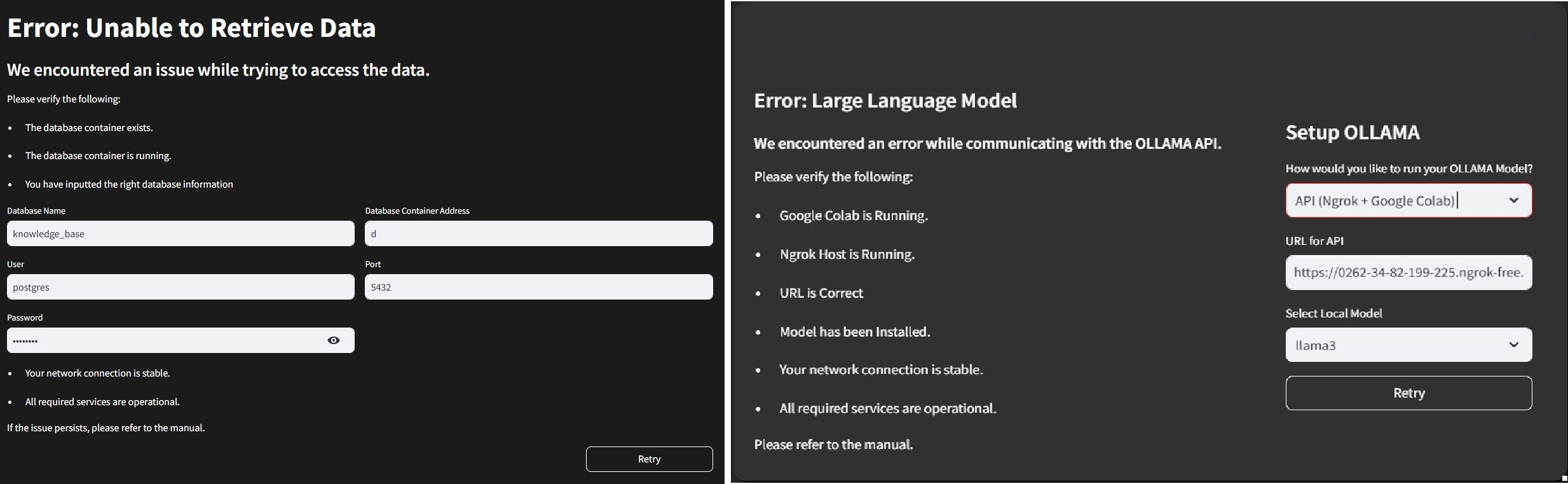

I also enhanced the chatbot system with new features. I implemented components that let users adjust settings for both the Large Language Model (LLM) and the database, providing greater flexibility and customization. I also added error handling mechanisms to improve the system's reliability and user experience.
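One way to combine user-adjustable settings with error handling is to validate every change and keep the previous settings when a change is rejected. The sketch below assumes hypothetical `LLMSettings` and `DatabaseSettings` classes; the field names, defaults (including the database port), and the allowed temperature range are illustrative assumptions, not the project's real configuration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LLMSettings:
    model: str = "llama3:8b"
    temperature: float = 0.2

    def __post_init__(self) -> None:
        if not self.model.strip():
            raise ValueError("model name must not be empty")
        # Hypothetical allowed range for the settings form
        if not 0.0 <= self.temperature <= 2.0:
            raise ValueError(f"temperature must be between 0 and 2, got {self.temperature}")


@dataclass(frozen=True)
class DatabaseSettings:
    host: str = "localhost"
    port: int = 5432  # hypothetical default; depends on the actual database engine

    def __post_init__(self) -> None:
        if not 1 <= self.port <= 65535:
            raise ValueError(f"port must be between 1 and 65535, got {self.port}")


def apply_changes(current, **changes):
    """Return (settings, error). Invalid changes keep the current settings."""
    try:
        # replace() builds a new instance, so __post_init__ validation runs again
        return replace(current, **changes), None
    except (TypeError, ValueError) as exc:
        # TypeError covers unknown field names; ValueError covers bad values
        return current, str(exc)
```

Returning the error message instead of raising lets the settings page show it to the user while the chatbot keeps running on the last valid configuration.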

Research and Experimentation

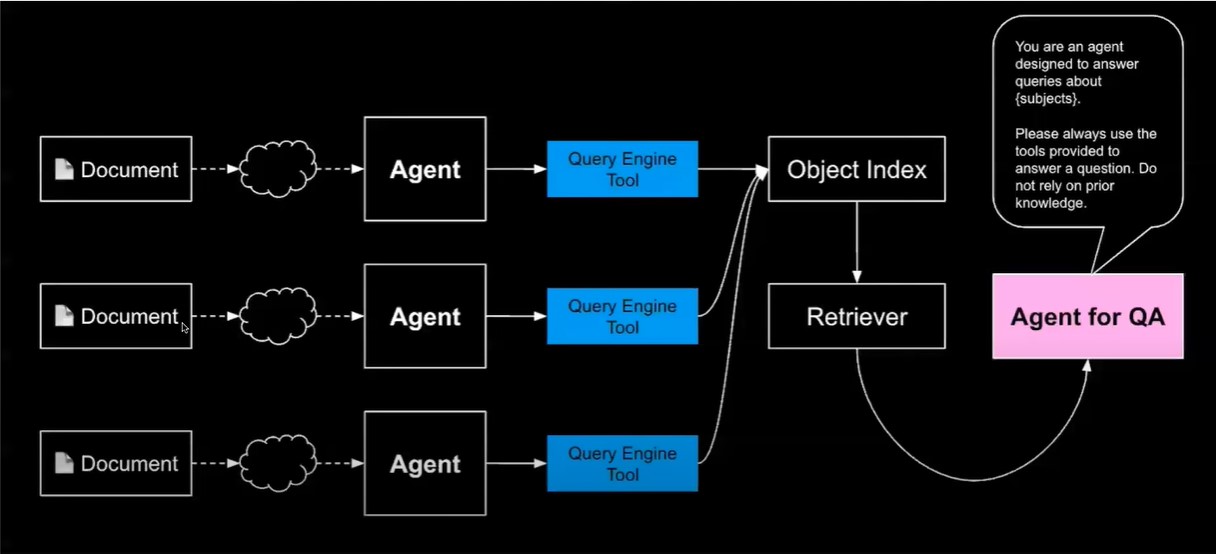

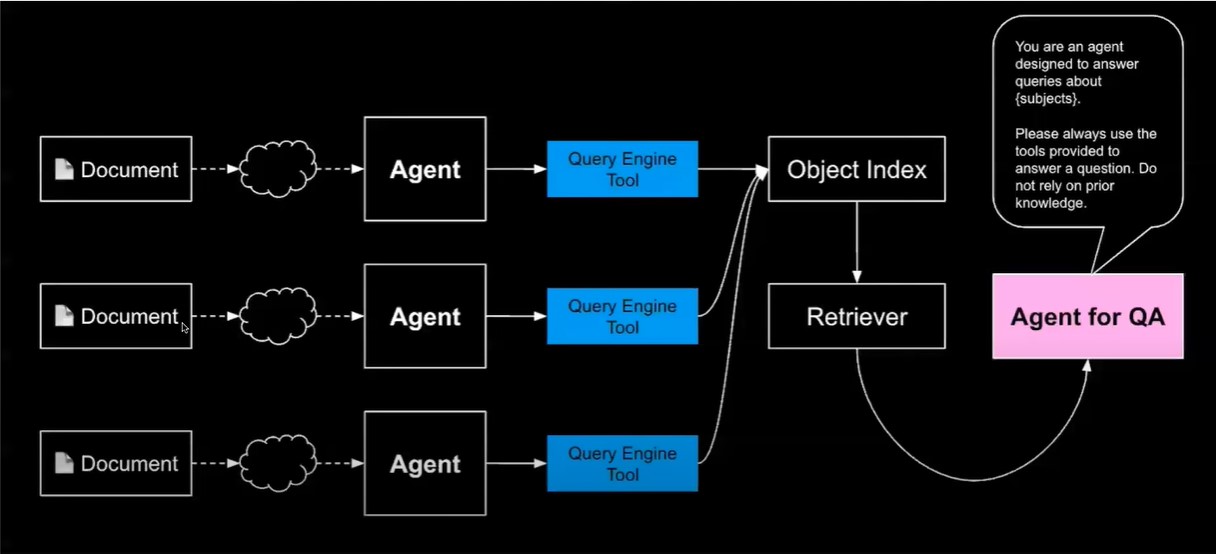

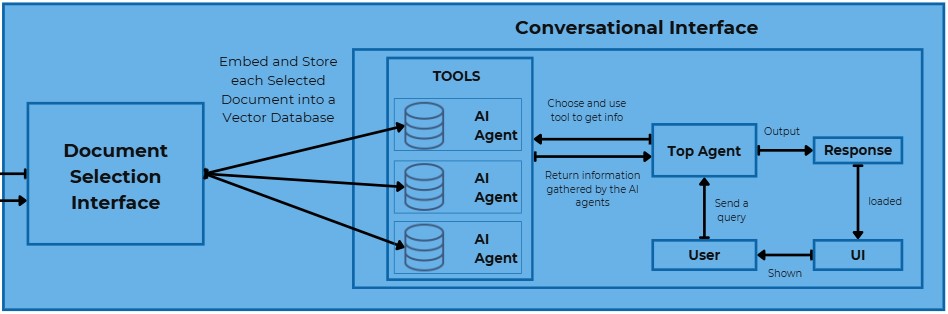

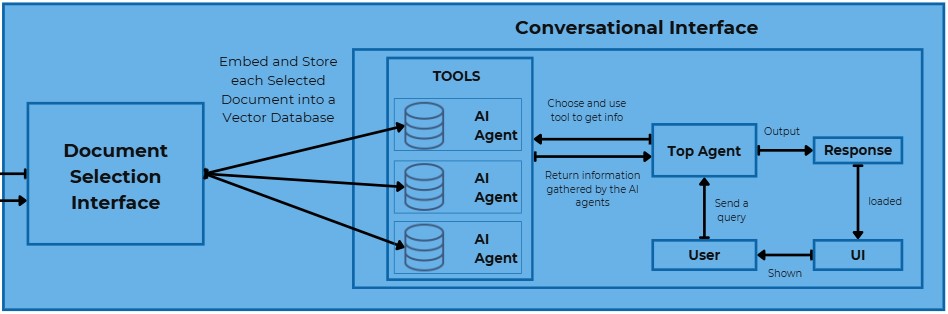

I also researched potential enhancements and alternatives for our chatbot system. One key area of my exploration was Llama Index, which piqued my interest as a potential alternative to our current LangChain implementation for language processing tasks. This research aimed to identify the capabilities and advantages Llama Index could bring to our system. I also studied AI agents to improve the chatbot's ability to handle user queries, since users will be able to select multiple documents at a time. This involved researching and testing multi-document agents, an approach inspired by Llama Index.
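The multi-document agent idea can be reduced to two levels: a small "agent" per document, and a top-level router that decides which of those agents to consult. The sketch below is a simplified illustration under stated assumptions: routing and answering use plain keyword overlap instead of an LLM, each per-document agent is just a function, and every name (`make_document_agent`, `route`, `multi_document_answer`) is hypothetical. It is not Llama Index's API.

```python
import re
from typing import Callable


def tokenize(text: str) -> set[str]:
    """Lower-cased word set; a crude stand-in for an LLM's understanding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def make_document_agent(title: str, text: str) -> Callable[[str], str]:
    """Hypothetical per-document agent: answers with the best-matching sentence."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

    def answer(query: str) -> str:
        q = tokenize(query)
        best = max(sentences, key=lambda s: len(q & tokenize(s)), default="")
        return f"[{title}] {best}"

    return answer


def route(query: str, summaries: dict[str, str], top_k: int = 2) -> list[str]:
    """Top-level agent: pick the documents whose summaries overlap most with the query."""
    q = tokenize(query)
    ranked = sorted(summaries, key=lambda t: len(q & tokenize(summaries[t])), reverse=True)
    # Drop documents with no overlap at all
    return [t for t in ranked[:top_k] if q & tokenize(summaries[t])]


def multi_document_answer(query: str, docs: dict[str, str],
                          summaries: dict[str, str], top_k: int = 2) -> list[str]:
    """Route the query, then collect one answer from each chosen document agent."""
    agents = {title: make_document_agent(title, text) for title, text in docs.items()}
    return [agents[title](query) for title in route(query, summaries, top_k)]
```

In a real setup, each agent would wrap its own retriever and LLM calls, and the router would be an LLM choosing among tools by their descriptions; the two-level shape stays the same.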

Images from Llama Index

Retrieval Augmented Generation (RAG) and AI Integration

During this time, I focused on a main component of the chatbot: setting up Retrieval Augmented Generation (RAG). I started by creating a basic RAG framework and then moved on to adding AI agents into the mix. The goal was to help the chatbot determine which documents to focus on when replying to a user's query, so that its responses are more relevant.
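A basic RAG pipeline retrieves the chunks most similar to the query, searching only the documents the user selected, and places them in the prompt. The sketch below assumes a bag-of-words cosine similarity as a stand-in for a real embedding model, a hypothetical 40-word chunk size, and a made-up prompt template; none of these come from the project itself.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def chunk(text: str, size: int = 40) -> list[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def retrieve(query: str, documents: dict[str, str], selected: list[str],
             k: int = 3) -> list[tuple[str, str]]:
    """Return the top-k (title, chunk) pairs, searching only the selected documents."""
    q = embed(query)
    scored = [(cosine(q, embed(c)), title, c)
              for title in selected for c in chunk(documents[title])]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(title, c) for score, title, c in scored[:k] if score > 0]


def build_prompt(query: str, hits: list[tuple[str, str]]) -> str:
    """Place the retrieved chunks in front of the question."""
    context = "\n\n".join(f"[{title}] {c}" for title, c in hits)
    return ("Answer the question using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}\nAnswer:")
```

Replacing `embed` with a real embedding model and the in-memory list with a vector store keeps the same structure; the prompt from `build_prompt` is what would be sent to the LLM.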

System Integration and Optimization

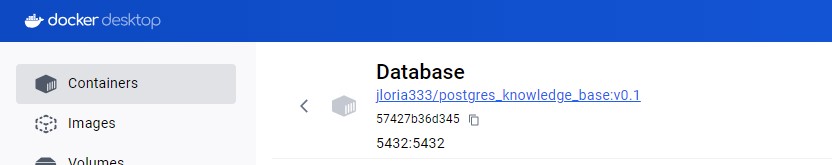

I also integrated the database with the chatbot setup to make data handling more efficient and easier. I pulled the database Docker image from the repository and ran it as a container with Docker. The data is now shown on the Document Select Page, where users can select the document(s) they want to talk about.
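Once the database container is running, populating the Document Select Page amounts to listing the documents and then loading the ones the user picked. The sketch below uses Python's built-in `sqlite3` module as a stand-in, since the post does not say which database engine the image provides; the `documents` table and its `id`, `title`, and `content` columns are hypothetical.

```python
import sqlite3


def init_schema(conn: sqlite3.Connection) -> None:
    """Hypothetical schema used only for this sketch."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS documents ("
        "id INTEGER PRIMARY KEY, title TEXT NOT NULL, content TEXT NOT NULL)"
    )


def list_documents(conn: sqlite3.Connection) -> list[tuple[int, str]]:
    """(id, title) pairs to show on the Document Select Page."""
    return conn.execute("SELECT id, title FROM documents ORDER BY title").fetchall()


def load_selected(conn: sqlite3.Connection, ids: list[int]) -> dict[str, str]:
    """Fetch title -> content for the documents the user selected."""
    if not ids:
        return {}
    # One "?" per id; the id values themselves are still passed as parameters
    placeholders = ",".join("?" for _ in ids)
    rows = conn.execute(
        f"SELECT title, content FROM documents WHERE id IN ({placeholders})", ids
    ).fetchall()
    return dict(rows)
```

Listing only ids and titles keeps the select page light, and full document contents are loaded only for the documents the user actually chooses.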

Additionally, I looked into using LLMCompiler from Llama Index to speed up response times by using parallel function calls. This meant trying out different methods to see which ones made responses faster and more efficient.
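LLMCompiler's core idea is to plan function calls as a dependency graph and run calls that do not depend on each other in parallel. The `asyncio` sketch below illustrates only that idea; it is not the Llama Index LLMCompiler API. It also simplifies execution into "waves" (all currently ready tasks run together), whereas LLMCompiler dispatches each task as soon as its own dependencies finish. The plan format and the `search_document` and `combine` tasks are hypothetical.

```python
import asyncio
from typing import Any, Awaitable, Callable

# Hypothetical plan format: task name -> (names of tasks it depends on,
# async function that receives those tasks' results as positional arguments)
Plan = dict[str, tuple[list[str], Callable[..., Awaitable[Any]]]]


async def run_plan(plan: Plan) -> dict[str, Any]:
    """Run a dependency graph of async calls, executing independent calls concurrently."""
    results: dict[str, Any] = {}
    pending = dict(plan)
    while pending:
        # Every task whose dependencies have all finished can start now
        ready = [name for name, (deps, _) in pending.items()
                 if all(d in results for d in deps)]
        if not ready:
            raise ValueError("plan has a cycle or an unknown dependency")
        outputs = await asyncio.gather(
            *(pending[name][1](*(results[d] for d in pending[name][0])) for name in ready)
        )
        for name, output in zip(ready, outputs):
            results[name] = output
            del pending[name]
    return results


async def search_document(title: str) -> str:
    """Hypothetical tool call, e.g. retrieval over one document."""
    await asyncio.sleep(0.05)  # simulate I/O latency
    return f"facts from {title}"


async def combine(a: str, b: str) -> str:
    return f"{a}; {b}"


if __name__ == "__main__":
    demo: Plan = {
        "a": ([], lambda: search_document("doc A")),
        "b": ([], lambda: search_document("doc B")),  # runs alongside "a"
        "answer": (["a", "b"], combine),              # waits for both
    }
    print(asyncio.run(run_plan(demo))["answer"])
```

Here the two searches overlap in time instead of running one after the other, which is where the response-time savings of parallel function calling come from.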

Fine-Tuning and Adjustments

Throughout my work on these technical and research tasks, I fine-tuned and adjusted various aspects of our chatbot system. This included making sure that the prompts used by the AI agents were consistent and effective in interactions with users. I also set the language model's temperature parameter to 0.2, which struck a good balance between creative and relevant responses.
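Temperature divides the model's logits before the softmax, `p_i = exp(z_i / T) / sum_j exp(z_j / T)`, so a value below 1 concentrates probability on the most likely tokens. The sketch below illustrates that formula with made-up logits; it does not reproduce Ollama's full sampler, which also applies other settings such as top-k and top-p.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """p_i = exp(z_i / T) / sum_j exp(z_j / T)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtracting the max avoids overflow and does not change the result
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (1.0, 0.2):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

With these logits, the top token gets about 0.63 of the probability at T = 1.0 but about 0.99 at T = 0.2, which is why a low temperature keeps answers focused while still leaving a little room for variation.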

To address concerns about scalability, I took proactive steps by removing the paper preview feature from the Chatbot Document Select Page. This decision was aimed at improving overall system performance and ensuring smooth scalability as our chatbot usage continues to grow.

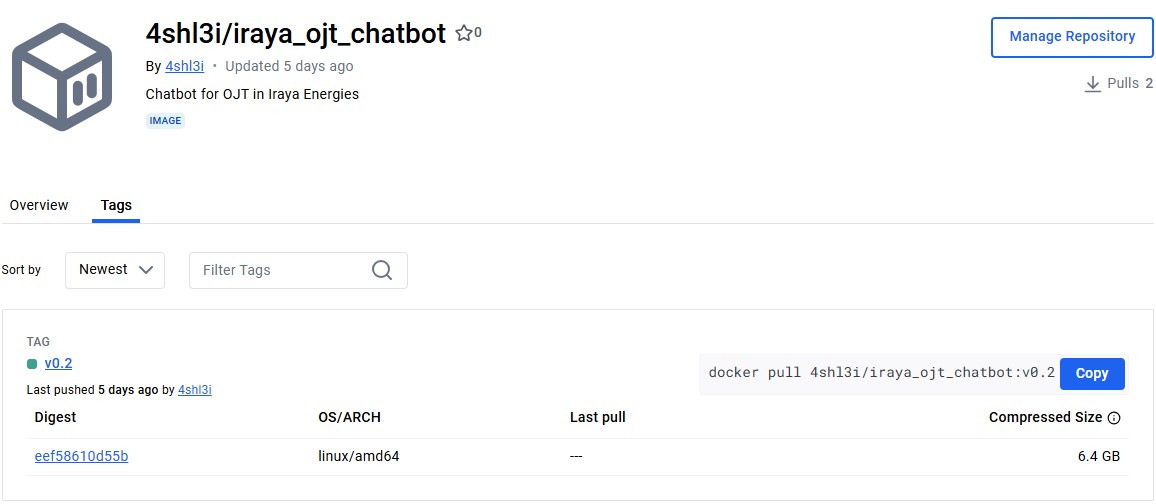

Dockerization

Since most of the components had already been integrated, I took the additional step of creating a Docker image and uploading it to my Docker Hub repository. This makes it easier to deploy the application consistently across different environments.

By using this Docker image, users can avoid the cumbersome and time-consuming process of manually installing and configuring each component. This streamlines setup and helps keep the application's behavior consistent from one machine to another. It also simplifies updates and maintenance, since changes can be built into a new version of the Docker image and redistributed with minimal disruption to end users.

In conclusion, I made significant strides in enhancing our chatbot this week. Key improvements include adding a search bar and page navigation to the Document Select Page, and I researched Llama Index as an alternative to LangChain. I explored AI agents for better query handling, set up a basic RAG framework, and integrated the database for more efficient data handling. I also explored LLMCompiler for faster responses, fine-tuned the prompts and temperature setting, and removed the paper preview feature to improve scalability.

To streamline deployment, I created a Docker image and uploaded it to my Docker Hub repository. This makes deployment across environments easier, avoids tedious manual installations, helps keep performance consistent, and simplifies maintenance.
