Welcome to my blog! I’m Jan Wilhelm T. Sy, a Computer Science student from Bicol University.

Over the past few weeks, I’ve had the incredible opportunity to undertake an On-the-Job Training (OJT) at Iraya Energies.

This experience will be a pivotal part of my academic journey, allowing me to apply and expand my knowledge in real-world settings.

Chatbot

Development

This week marks the fourth week of my On-the-Job Training (OJT) program, which focuses on chatbot development. From July 1 to July 5, 2024, I worked on the main development of the chatbot.

Building on the previous weeks, my main goal was to develop the chatbot further by improving features from the prototype and implementing the new chatbot design created last week.

ACTIVITY PERFORMED

In line with the mentorship plan, this week's activities revolved heavily around chatbot development.

Streamlit Chatbot Development

This week, I focused on enhancing the chatbot prototype by implementing the new design and adding functionality. I started by building the Figma design in Streamlit, adjusting the design in Figma where needed to fit what Streamlit can do.

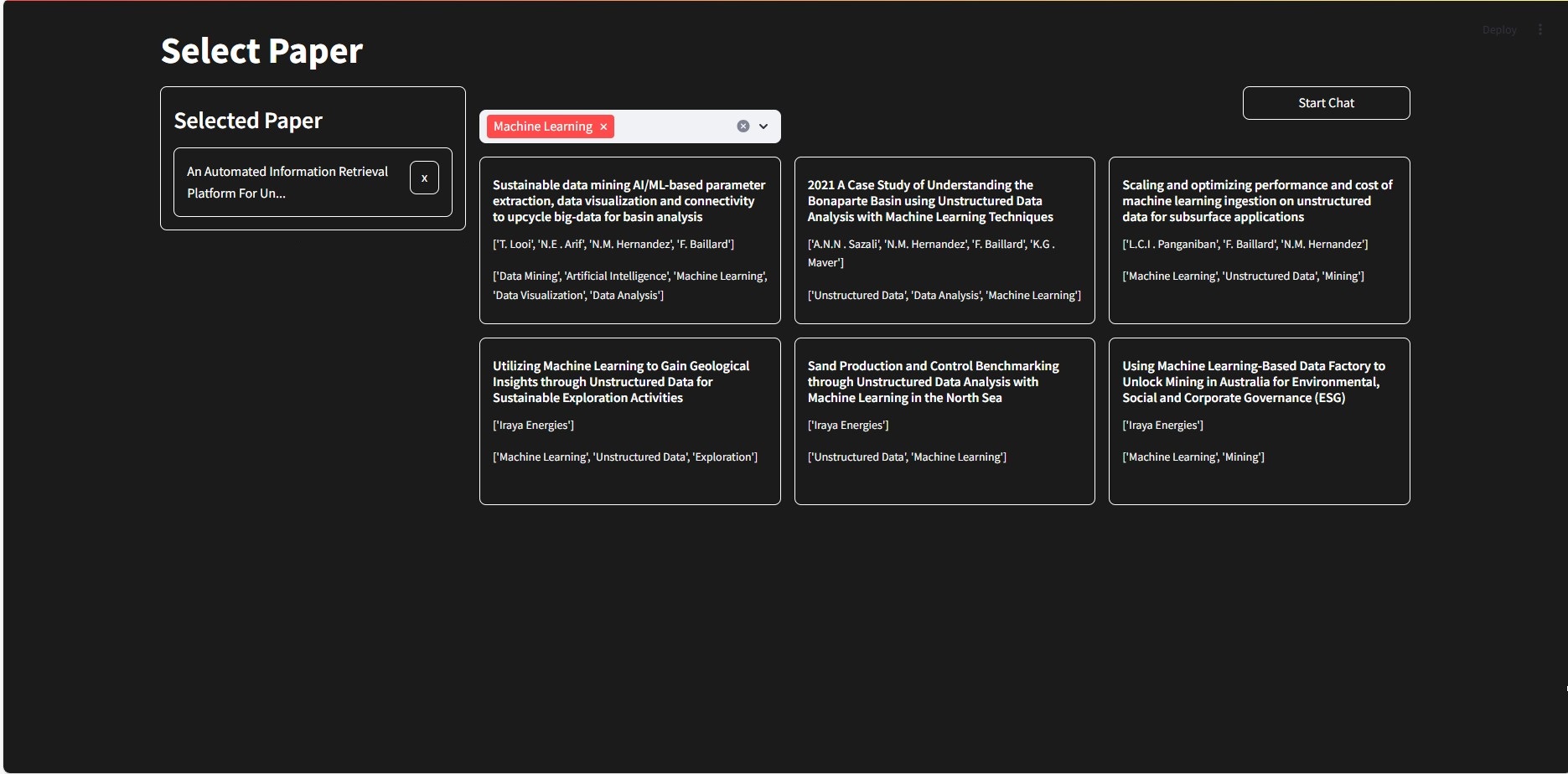

The UI consists of two parts: a document selection interface and a conversational interface. In the document selection interface, users can select up to three papers for the LLM to query. For this part, I created a sample dictionary that holds each document's information, such as its title, authors, and tags, and looped through it to display the available documents. I also implemented a filter bar so users can filter the displayed papers by tag.
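A minimal sketch of how this selection screen could be wired up, assuming the sample dictionary maps a document ID to its title, authors, and tags. The document IDs, titles, and the `filter_by_tags` and `render_selector` helpers are hypothetical names for illustration, and a paper is assumed to pass the filter if it carries any of the chosen tags. Streamlit is imported inside `render_selector` so the filtering logic can be run on its own.

```python
from typing import TypedDict


class Document(TypedDict):
    title: str
    authors: list[str]
    tags: list[str]


# Hypothetical sample data standing in for the real paper metadata.
SAMPLE_DOCUMENTS: dict[str, Document] = {
    "doc-1": {"title": "Paper A", "authors": ["Author 1"], "tags": ["nlp"]},
    "doc-2": {"title": "Paper B", "authors": ["Author 2", "Author 3"], "tags": ["geology", "nlp"]},
    "doc-3": {"title": "Paper C", "authors": ["Author 4"], "tags": ["geology"]},
}

MAX_SELECTED = 3  # users may pick at most three papers


def filter_by_tags(documents: dict[str, Document], chosen_tags: list[str]) -> dict[str, Document]:
    """Keep documents carrying at least one chosen tag; no chosen tags means no filtering."""
    if not chosen_tags:
        return dict(documents)
    wanted = set(chosen_tags)
    return {doc_id: doc for doc_id, doc in documents.items() if wanted & set(doc["tags"])}


def render_selector(documents: dict[str, Document]) -> list[str]:
    """Draw the filter bar and document list; return the selected document IDs."""
    import streamlit as st  # imported here so filter_by_tags works without Streamlit

    all_tags = sorted({tag for doc in documents.values() for tag in doc["tags"]})
    chosen_tags = st.multiselect("Filter by tag", all_tags)
    visible = filter_by_tags(documents, chosen_tags)

    # Loop through the (filtered) dictionary to show each available paper.
    for doc in visible.values():
        st.markdown(
            f"**{doc['title']}**  \n{', '.join(doc['authors'])}  \nTags: {', '.join(doc['tags'])}"
        )

    return st.multiselect(
        "Select up to three papers",
        list(visible),
        format_func=lambda doc_id: documents[doc_id]["title"],
        max_selections=MAX_SELECTED,
    )
```

Calling `render_selector(SAMPLE_DOCUMENTS)` from a Streamlit script would draw this screen. The `max_selections` argument of `st.multiselect` (available in recent Streamlit versions) lets the widget enforce the three-paper limit itself instead of checking it by hand.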

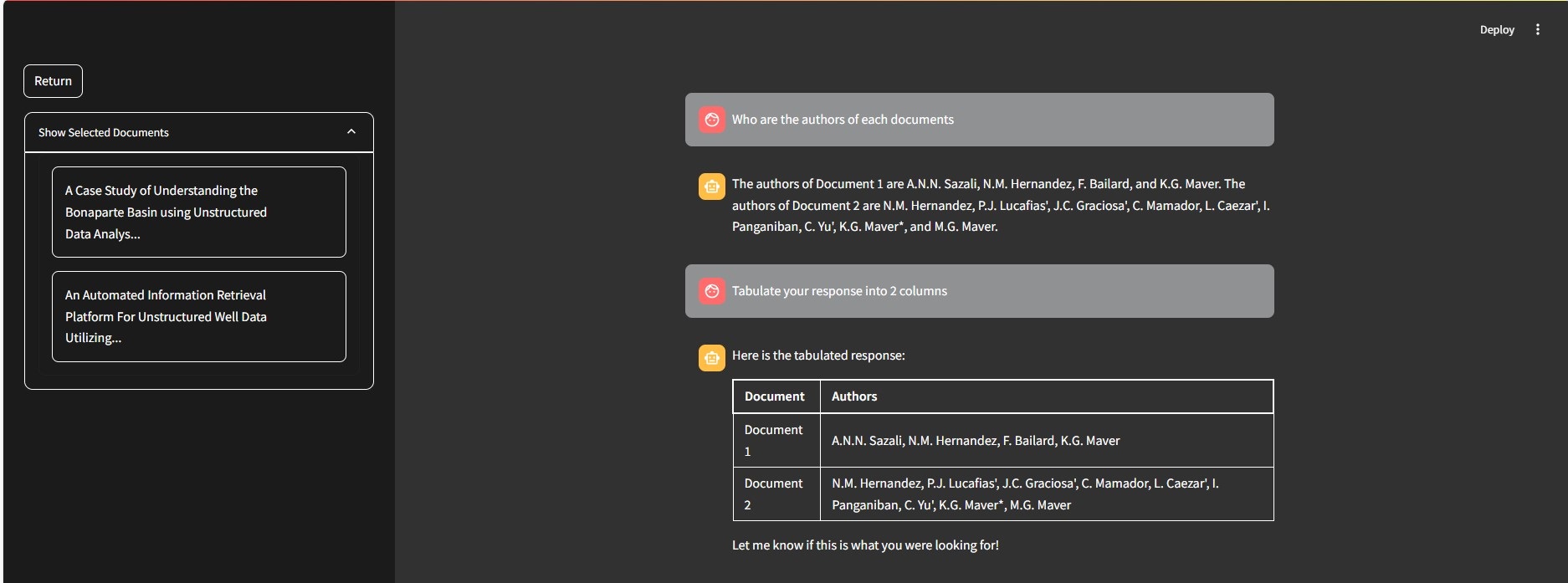

The second part of the UI is the conversational interface. Using Streamlit's chat components, I built a chat interface where users can interact with the LLM, with the selected documents displayed on the side.
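A minimal sketch of such a chat page using Streamlit's `st.chat_message`, `st.chat_input`, and `st.session_state`. It assumes the LLM call is wrapped in a function `answer_fn(prompt, selected_titles)`; `add_turn`, `render_chat`, and `answer_fn` are hypothetical names, and placing the selected documents in `st.sidebar` is an assumption about the layout.

```python
from typing import Callable

# Each message is {"role": "user" | "assistant", "content": text}.
Message = dict[str, str]
AnswerFn = Callable[[str, list[str]], str]


def add_turn(history: list[Message], prompt: str, answer_fn: AnswerFn, selected: list[str]) -> str:
    """Record the user's prompt, ask the model, then record and return its answer."""
    history.append({"role": "user", "content": prompt})
    answer = answer_fn(prompt, selected)
    history.append({"role": "assistant", "content": answer})
    return answer


def render_chat(answer_fn: AnswerFn, selected: list[str]) -> None:
    """Draw the chat page, listing the selected documents in the sidebar."""
    import streamlit as st  # imported here so add_turn can be tested without Streamlit

    if "messages" not in st.session_state:
        st.session_state.messages = []  # history survives Streamlit's reruns

    with st.sidebar:
        st.subheader("Selected documents")
        for title in selected:
            st.write(title)

    # Streamlit reruns the whole script on each interaction, so replay earlier turns.
    for msg in st.session_state.messages:
        with st.chat_message(msg["role"]):
            st.markdown(msg["content"])

    if prompt := st.chat_input("Ask about the selected papers"):
        with st.chat_message("user"):
            st.markdown(prompt)
        answer = add_turn(st.session_state.messages, prompt, answer_fn, selected)
        with st.chat_message("assistant"):
            st.markdown(answer)
```

Keeping the history update in a plain function separates the chat bookkeeping from the rendering, so the same `add_turn` works whichever model backs `answer_fn`.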

Additional Research

I explored other open-source models available on the Hugging Face platform and learned to use its Inference API to speed up inference. Instead of loading a model locally, I can now call the API, which is faster and keeps the local setup lightweight.
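A minimal sketch of calling a hosted model over HTTP instead of loading it locally, using only the standard library. The endpoint URL and payload shape follow the serverless Inference API as documented around 2024 and should be treated as assumptions to check against current Hugging Face docs; `build_request` and `query` are hypothetical helper names, and a Hugging Face access token is required. The official `huggingface_hub` package also provides an `InferenceClient` class that wraps calls like this.

```python
import json
import urllib.request

# Serverless Inference API endpoint as documented around 2024; verify against current docs.
API_URL = "https://api-inference.huggingface.co/models/{model_id}"


def build_request(model_id: str, token: str, prompt: str, max_new_tokens: int = 256) -> urllib.request.Request:
    """Build a POST request for a text-generation model hosted on Hugging Face."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        API_URL.format(model_id=model_id),
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def query(model_id: str, token: str, prompt: str, max_new_tokens: int = 256):
    """Send the prompt to the remote model and return the decoded JSON response."""
    request = build_request(model_id, token, prompt, max_new_tokens)
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read().decode("utf-8"))
```

Here `model_id` is any text-generation model ID from the Hub. The heavy computation happens on Hugging Face's servers, so the local machine only sends JSON and receives the generated text back.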

Additionally, I continued researching ways to improve the Retrieval-Augmented Generation (RAG) system, experimenting with different embedding models and chunk sizes.
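A minimal sketch of the two settings being varied: a fixed-size chunker with optional overlap, and cosine-similarity retrieval that takes the embedding model as a plain function so models can be swapped without touching the rest. `chunk_text`, `retrieve`, and `make_bow_embedder` are hypothetical names; the bag-of-words embedder is only a toy stand-in for a real embedding model, and measuring chunks in characters is an assumption, since the post does not say how chunk size was measured.

```python
import math
from typing import Callable

Embedder = Callable[[str], list[float]]


def chunk_text(text: str, chunk_size: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of at most chunk_size characters, sharing `overlap` characters."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; defined as 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 0.0 if norm_a == 0 or norm_b == 0 else dot / (norm_a * norm_b)


def retrieve(query: str, chunks: list[str], embed: Embedder, k: int = 3) -> list[str]:
    """Return the k chunks whose embeddings are most similar to the query's."""
    query_vec = embed(query)
    scored = [(cosine(query_vec, embed(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:k]]


def make_bow_embedder(vocabulary: list[str]) -> Embedder:
    """Toy stand-in for an embedding model: word counts over a fixed vocabulary."""
    index = {word: i for i, word in enumerate(dict.fromkeys(w.lower() for w in vocabulary))}

    def embed(text: str) -> list[float]:
        vec = [0.0] * len(index)
        for word in text.lower().split():
            if word in index:
                vec[index[word]] += 1.0
        return vec

    return embed
```

Smaller chunks tend to give more focused matches but less context per chunk. Re-running the same questions while changing `chunk_size`, `overlap`, and the `embed` function is one simple way to compare settings.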

In conclusion, this week's efforts focused on enhancing the chatbot prototype with Streamlit, implementing the Figma-designed UI, and integrating functionality such as the Naive RAG system. The UI now includes a document selector and a chat page. Using Hugging Face's Inference API has made inference more efficient, and ongoing research into optimizing the RAG system continues to refine the chatbot's performance.